AI-centric Computational Architectures

In which we ponder various futures where machine learning models become integral components of software stacks, and what that would mean for computational architectures

In his introductory video on large language models, Andrej Karpathy (of OpenAI and Tesla fame) introduces the concept of an “LLM OS”, in which the LLM is not just one piece of a larger software stack (as it’s normally used today) but its very center.

The key idea here is that the LLM is the driver. As it receives instructions from the user, it decides what to do with them and invokes other peripherals (here depicted as high-level components like “browsers” or “programming interpreters”). It is a fascinating picture because, while it resembles the traditional von Neumann architecture we’re used to, it is profoundly different from it.
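To make the “LLM as driver” idea a bit more concrete, here is a minimal Python sketch of the control flow. It assumes a hypothetical `fake_llm` function standing in for a real model (a real one would return structured tool calls rather than these made-up `CALL`/`ANSWER` strings) and two toy peripherals, `browser` and `interpreter`, which are illustrative stubs. The host loop only carries messages back and forth; the model decides which peripheral runs and when to stop.

```python
from typing import Callable, Dict, List

def browser(query: str) -> str:
    # Toy peripheral (hypothetical): pretends to search the web.
    return f"search results for {query!r}"

def interpreter(expr: str) -> str:
    # Toy peripheral (hypothetical): only knows how to add integers like "2+3+4".
    return str(sum(int(term) for term in expr.split("+")))

TOOLS: Dict[str, Callable[[str], str]] = {"browser": browser, "interpreter": interpreter}

def fake_llm(history: List[str]) -> str:
    """Hypothetical stand-in for the model. Replies 'CALL <tool> <arg>'
    to use a peripheral or 'ANSWER <text>' to finish."""
    last = history[-1]
    if last.startswith("TOOL "):
        return "ANSWER " + last[len("TOOL "):]
    if last.startswith("USER compute "):
        return "CALL interpreter " + last[len("USER compute "):]
    return "CALL browser " + last[len("USER "):]

def llm_os(user_input: str, max_steps: int = 5) -> str:
    history = ["USER " + user_input]
    for _ in range(max_steps):
        reply = fake_llm(history)  # the model decides what happens next
        if reply.startswith("ANSWER "):
            return reply[len("ANSWER "):]
        _, tool, arg = reply.split(" ", 2)
        history.append("TOOL " + TOOLS[tool](arg))  # run the chosen peripheral
    return "gave up"

print(llm_os("compute 2+3+4"))  # 9
```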

How is it different? For one, many of these components are inherently stochastic (that is, noisy: their output has a random component). Give them the same input and we might not get the same output. We are already used to this kind of behavior from computational tools like search engines, but we’re very much NOT interested in it when we’re dealing with our banking apps or spreadsheets.
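A large part of that randomness comes from how the next token is picked. Below is a minimal sketch of temperature sampling over a made-up set of scores (the tokens and logits are hypothetical): at temperature 0 the choice is greedy and repeatable, while any positive temperature draws from a softmax distribution, so the same input can yield different outputs.

```python
import math
import random
from typing import Dict

def sample_next_token(logits: Dict[str, float], temperature: float, rng: random.Random) -> str:
    """Pick the next token from a map of token -> logit (unnormalized score)."""
    if temperature == 0:
        # Greedy decoding: always the highest-scoring token, hence deterministic.
        return max(logits, key=logits.get)
    # Softmax with temperature: a higher temperature flattens the distribution.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())
    # Subtract the max before exponentiating for numerical stability.
    weights = {tok: math.exp(s - top) for tok, s in scaled.items()}
    tokens = list(weights)
    return rng.choices(tokens, weights=[weights[t] for t in tokens], k=1)[0]

# Hypothetical scores a model might assign to the next word.
logits = {"cat": 2.0, "dog": 1.5, "ferret": 0.5}
rng = random.Random()
print({sample_next_token(logits, 0.0, rng) for _ in range(20)})  # {'cat'}
print({sample_next_token(logits, 1.0, rng) for _ in range(20)})  # usually more than one word
```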

Other solutions are emerging in this space, like LangChain, LlamaIndex, Google Labs’ Breadboard and YouAI’s MindStudio (to name just the ones I know of), in which the CPU is really a program we write that uses the LLM as a co-processor.

I think of the two architectures as “LLM as CPU” vs. “LLM as GPU”.

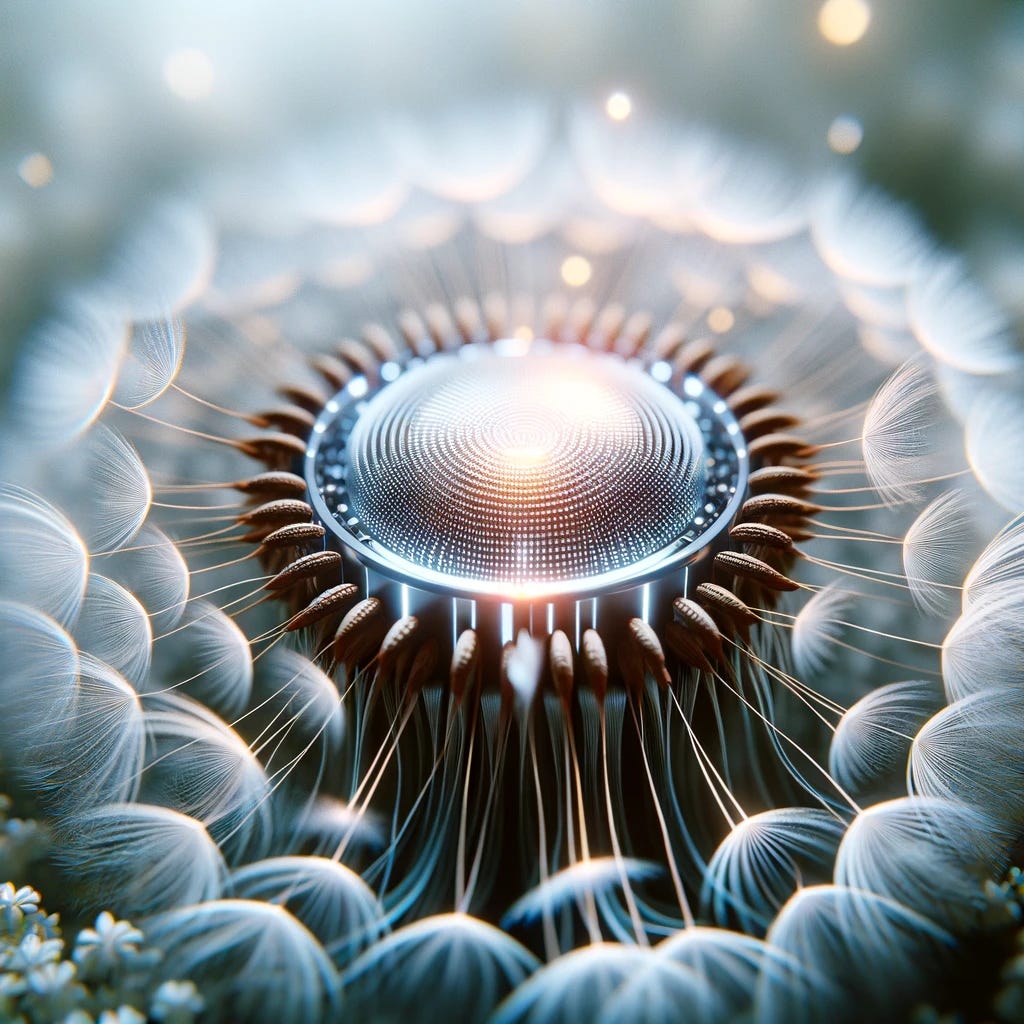

If the LLM is the CPU, we don’t really program it; we influence it, we nudge it. OpenAI is taking this approach with its GPT store, where people build their own GPTs by tailoring the behavior of a general-purpose LLM with custom instructions and extra knowledge. I can see this approach empowering people without any programming skills, a sort of “AI Visual Basic”. But just like Visual Basic, I fear it will produce a lot of toys that look promising and can be built quickly but overpromise on their ability to fit a nuanced purpose. It doesn’t really matter to OpenAI, though: lots of product dandelion seeds is exactly what they need to maximize fit for purpose in their own products. Many of your GPTs are going to fail to get any traction, but that’s a sacrifice OpenAI is more than willing to make.

The other solution is a computer program, a set of hand-written rules, that mediates the user input and uses the LLM as a “co-processor” (the way GPUs are used). It knows (or guesses) what each LLM can and cannot do, and it can invoke several of them or coordinate with other processes, input and output methods, just like a regular computer program would.
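Here is a minimal sketch of the “LLM as GPU” shape, assuming a hypothetical `fake_llm` in place of a real model call and a made-up invoice-extraction task. The hand-written program frames the request, validates what comes back and retries when the stochastic output is unusable, so the randomness stays contained inside one call.

```python
import json
from typing import Callable

def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call."""
    if prompt.startswith("Extract"):
        return '{"amount": 120.5, "currency": "EUR"}'
    return "Sorry, I am not sure what you mean."

def is_valid(data: object) -> bool:
    # Hand-written rules decide what counts as an acceptable answer.
    return (
        isinstance(data, dict)
        and isinstance(data.get("amount"), (int, float))
        and data.get("currency") in {"EUR", "USD"}
    )

def parse_invoice(text: str, llm: Callable[[str], str], retries: int = 2) -> dict:
    # The program owns the control flow; the model only answers a narrow question.
    prompt = f"Extract the amount and currency as JSON from: {text}"
    for _ in range(retries + 1):
        raw = llm(prompt)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # malformed output: ask again
        if is_valid(data):
            return data
    raise ValueError("model output failed validation")

print(parse_invoice("Total due: 120.50 EUR", fake_llm))
```

Because `parse_invoice` takes the model as a parameter, swapping one LLM for another (or for a cheaper, special-purpose one) doesn’t touch the control flow, which is part of the appeal of this design.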

A similar “r-selection” (the ecological strategy of scattering many cheap seeds in the hope that a few take root) is happening here, but the stochasticity of the plan is more isolated. I can see this solution being favored by people with even minimal programming experience who want more control (at least conceptually) over how their product operates.

The Loop Gap

There is another thing Andrej mentions in this video that I found very intriguing: System 1 vs. System 2 thinking, and how it applies to neural networks built on the transformer architecture (which the vast majority of LLMs use these days).

The claim is that LLMs are “instinctive” (aka System 1) thinkers. They operate in a “single forward pass”, running the same fixed number of layers for every token they generate, which is very similar to how our brains can perform complex processing in a fraction of a second regardless of the complexity of the input signal. Driving a car, for example, is a System 1 effort for experienced drivers.

System 2 thinking is intentional, slower, conscious and effortful. Driving a car is very much a System 2 effort for people learning how to drive for the first time. LLMs seem to struggle with this type of activity.

Gordon suggests that “agency may be stored in the loops”, and that idea resonates with me. To me, LLMs feel like instinctual machines that live on the surface of the manifolds of thought. Nudging them toward the desired solution while chatting with them is vastly more intriguing than typing text into a search engine, but it is not always satisfying, precisely because this flatness shows through in anything that requires even the simplest logical loop.
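One way to picture “agency stored in the loops” is a generate-and-verify loop wrapped around a one-shot guesser. In this sketch the “System 1” part is a hypothetical `system1_guess` that blurts out a random candidate factor of a number; the toy factoring task is only an illustration, not how LLMs are actually used. A single guess is usually wrong, but the loop that checks and retries finds an answer.

```python
import random
from typing import Callable, Optional

def system1_guess(n: int, rng: random.Random) -> int:
    """Hypothetical 'instinctive' solver: one fast guess at a factor of n,
    with no checking and no second attempt."""
    return rng.randint(2, n - 1)

def system2_solve(
    n: int,
    propose: Callable[[int, random.Random], int],
    rng: random.Random,
    max_loops: int = 10_000,
) -> Optional[int]:
    # The agency lives in this loop: propose, verify, and try again if wrong.
    for _ in range(max_loops):
        guess = propose(n, rng)
        if n % guess == 0:  # cheap, exact verification
            return guess
    return None  # give up (e.g. n is prime)

rng = random.Random()
print(system2_solve(91, system1_guess, rng))  # 7 or 13
```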

“Hold on,” I hear you say, “what about GPT-4 and Gemini passing all those math quizzes zero-shot? Those seem to require System 2 thinking, no?” I have mostly used LLMs as coding copilots (daily, for at least six months), and while they are significantly more useful than a search engine, it very much feels like they are parroting what they have read with only a superficial understanding of what it means. It goes deeper than syntax, but not that much deeper. System 1 thinking does not get you very far in programming, even with repeated nudging.

I’m more intrigued by sophisticated neural architectures like AlphaGo and AlphaZero from Google DeepMind’s Alpha* series, which combine Monte Carlo tree search with neural network models, trained using reinforcement learning, that guide the search. While it’s pretty common these days to use reinforcement learning to fine-tune LLMs (see RLHF), once the weights are fine-tuned they stay the same query after query. The trick many use is to extend the “context” of the query with the previous exchanges (which gives the LLM the “memory” of our conversation), but the internal state of the LLM never changes. In fact, that is why the same model can answer queries from different people one after the other: its internal state holds no memory of its conversation with you.
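The “memory lives in the context” point can be sketched in a few lines. `frozen_model` below is a hypothetical stand-in for an LLM with fixed weights: a pure function of the messages it receives. The `ChatSession` class, also illustrative, holds the conversation and resends all of it on every turn, which is the only reason the model appears to remember anything.

```python
from typing import List, Tuple

Message = Tuple[str, str]  # (role, text)

def frozen_model(context: List[Message]) -> str:
    """Hypothetical stand-in for an LLM with frozen weights: a pure function
    of its input that keeps no state between calls."""
    question = context[-1][1]
    if question == "What is my name?":
        for role, text in context:
            if role == "user" and text.startswith("My name is "):
                return text[len("My name is "):].rstrip(".")
        return "I don't know."
    return "OK."

class ChatSession:
    """The 'memory' lives here, on the client side, not inside the model."""

    def __init__(self) -> None:
        self.history: List[Message] = []

    def send(self, text: str) -> str:
        self.history.append(("user", text))
        reply = frozen_model(self.history)  # the whole conversation is resent each turn
        self.history.append(("assistant", reply))
        return reply

alice, bob = ChatSession(), ChatSession()
alice.send("My name is Alice.")
print(alice.send("What is my name?"))  # Alice
print(bob.send("What is my name?"))    # I don't know.
```

Both sessions call the very same function; Alice’s gets her name back only because it is in the history she resends, and nothing from her conversation leaks into Bob’s.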

But here is the part that intrigues me: clearly our brains don’t work like this. At the very least, there is a System 1 process, a System 2 process and a third process that can turn one into the other. Even if we have somehow stumbled into recreating a System 1 process with transformer-based neural networks, that’s a far cry from “general intelligence”, which would need at least the ability to perform a few “loops” that are not just autoregressive steps.
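For contrast, this is what the autoregressive loop looks like: each step runs the same fixed computation once and emits one token, which is appended to the input for the next step. The bigram table is a hypothetical stand-in for a transformer; the point is the shape of the loop, which only ever feeds outputs back as inputs and never checks, backtracks or revises them.

```python
from typing import Dict, List

# Hypothetical toy "model": maps the last token to the next one.
BIGRAMS: Dict[str, str] = {"<s>": "the", "the": "cat", "cat": "sat", "sat": "</s>"}

def next_token(context: List[str]) -> str:
    # One "forward pass": a fixed amount of work that emits exactly one token.
    return BIGRAMS.get(context[-1], "</s>")

def generate(prompt: List[str], max_tokens: int = 10) -> List[str]:
    tokens = list(prompt)
    for _ in range(max_tokens):
        tok = next_token(tokens)
        if tok == "</s>":  # end-of-sequence marker
            break
        tokens.append(tok)  # the output becomes part of the next input
    return tokens

print(generate(["<s>"]))  # ['<s>', 'the', 'cat', 'sat']
```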

But can these loops be either a) programmed by hand or b) chosen by the same System 1 component? Both options seem to fall short of the nuance and sophistication that System 2 thought needs to “understand” what it is interacting with. And what about the “third component”, the ability to turn System 2 thought into System 1? Is OpenAI’s harvesting of GPTs and millions of ChatGPT conversations enough for this, or is something missing from this feedback loop?

I don’t really have answers to these questions, but the frontier of System 2 thinking in AI models feels like the missing link to truly intelligent, symbiotic human augmentation through computational systems.